I make my way down the pedestrian avenues of the Swiss Federal Institute of Technology Lausanne (EPFL) campus, where I meet with Antoine Bosselut, specialist in artificial intelligence and multilingual issues for large language models (LLMs). These artificial intelligence systems, packed with billions of units of data, can answer any number of questions, in a similar manner to ChatGPT. The 34-year-old professor, born in France and educated in the United States, knows his fair share about creating machines that can master languages from Tibetan to Romansh. He is one of the fathers of the new Swiss AI model, Apertus.

Its algorithms are freely accessible

In early September, the two Swiss institutes of technology and the Swiss National Supercomputing Centre (CSCS) announced the launch of the first multilingual open-source LLM developed in Switzerland. “Apertus represents a major step forward in transparency and diversity for generative artificial intelligence,” its creators say. What sets this LLM apart from Llama 4 (developed by Meta), Grok (produced by Elon Musk’s company xAI) or even ChatGPT, which is an entire AI system? The elements making up the Swiss system – its algorithms and computation parameters – are freely accessible, and instructions are provided with them, whereas ChatGPT, for example, remains an opaque commercial model.

Another difference is that Apertus is not a general system. “Commercial models are not sufficiently specialised for certain specific purposes; however, the more specialised AI is, the stronger it becomes,” explains Bosselut.

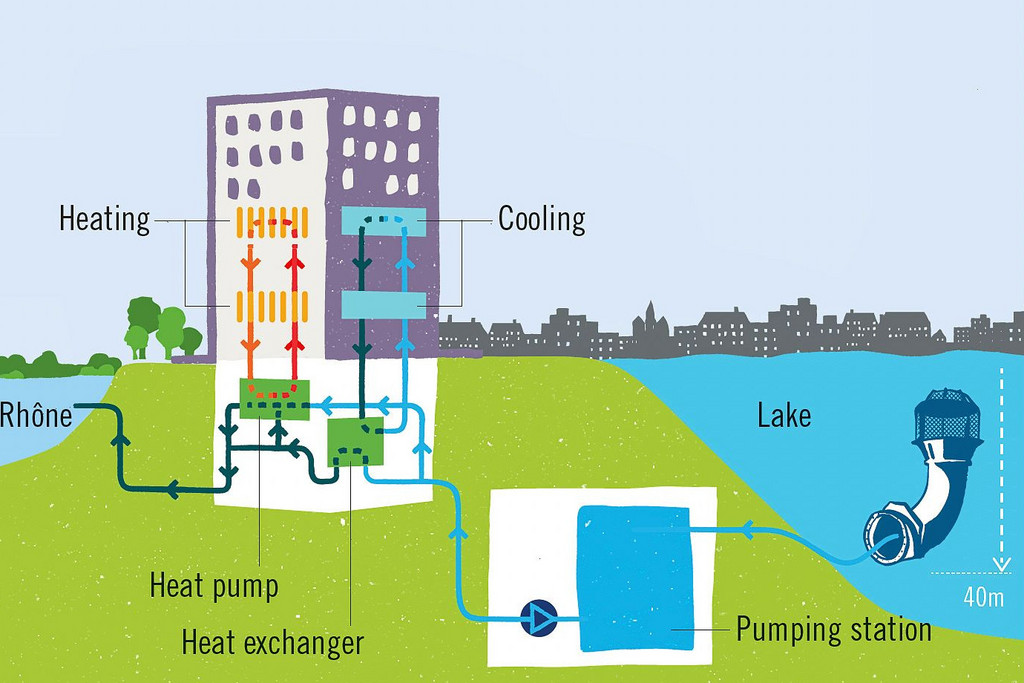

Hospitals could use Apertus as a tool – its algorithms and its computation system – for training systems to analyse thousands of radiographs. The AI is capable of comparing data and detecting differences barely visible to the human eye.

The search for secure data

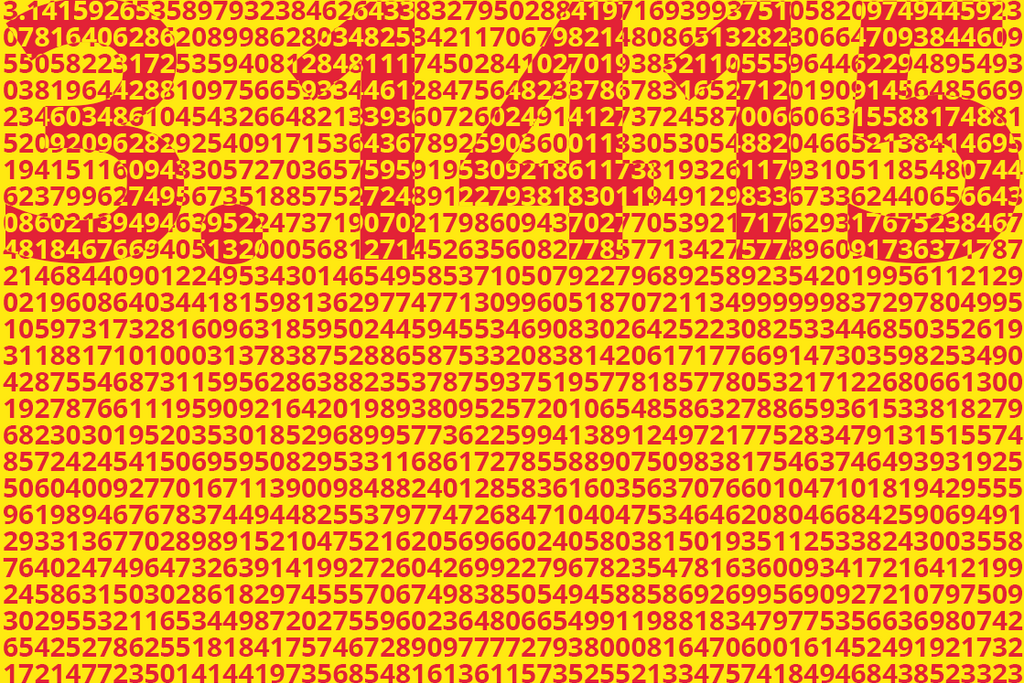

The CSCS supercomputer trained Apertus using billions of data items found online. This data constitutes an LLM’s basic lexicon. For this model, data was only used when the owners did not expressly forbid the use of “crawlers”, robots that scrape the web for content, according to the EPFL. “If, for example, the ‘New York Times’ were to block access to its articles from certain crawlers, we would exclude it as a source for our data,” the professor says.
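To make the idea of respecting crawler opt-outs concrete, here is a minimal sketch of how such a check can work. Websites usually state their wishes in a `robots.txt` file, and Python's standard library can read it. This is only an illustration under stated assumptions, not the pipeline used for Apertus: the crawler name `ExampleAIBot`, the `example.org` addresses and the file contents are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, inlined so the sketch runs without network access.
# It bans one named crawler from the whole site and keeps everyone
# out of /private/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules let this crawler fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def keep_permitted(urls, robots_txt: str, user_agent: str):
    """Keep only the URLs that the site owner has not closed to this crawler."""
    return [u for u in urls if may_fetch(robots_txt, user_agent, u)]


if __name__ == "__main__":
    candidates = [
        "https://example.org/news/article.html",
        "https://example.org/private/draft.html",
    ]
    # The banned crawler keeps nothing; another crawler keeps the public page.
    print(keep_permitted(candidates, ROBOTS_TXT, "ExampleAIBot"))
    print(keep_permitted(candidates, ROBOTS_TXT, "OtherBot"))
```

A source that names a crawler and disallows everything simply drops out of the candidate list, which mirrors the professor's example of a newspaper blocking certain crawlers.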

Apertus was trained on some 15,000 billion words drawn from 1,800 languages (there are approximately 50,000 billion words on the internet). Because the data was collected in this way, the creators of the LLM can guarantee future users, such as businesses, that it is reliable in the ethical and legal sense of the term, in contrast to the commercial players in AI, who refuse to publish their training data.
